How Pigeons Paved the Way for Modern AI

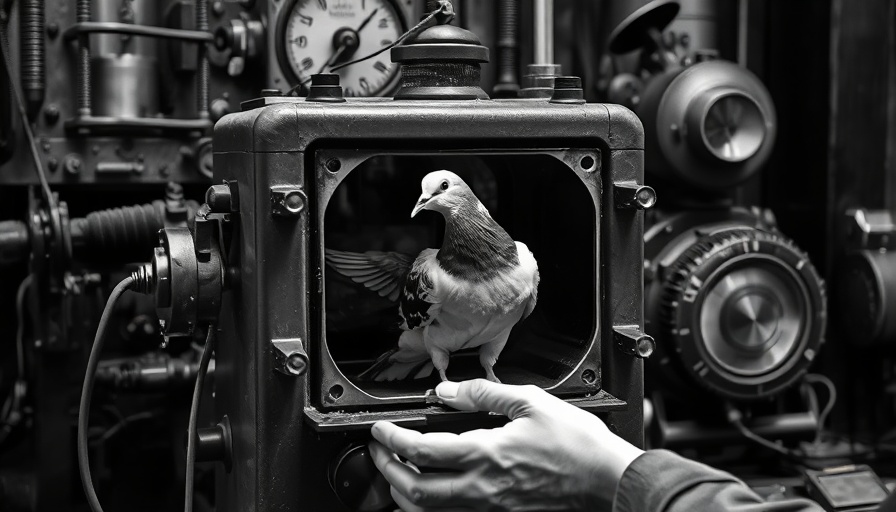

While we often trace the roots of artificial intelligence (AI) to visionary science fiction writers and technology pioneers, it is worth recognizing an unorthodox contribution from the study of animal behavior. American psychologist B.F. Skinner's experiments with pigeons in the mid-20th century may seem an unlikely precursor to AI development, yet his behaviorist theories explored a crucial concept: association, or learning through rewards and punishments.

Although behaviorism had fallen out of favor among psychologists by the 1960s, Skinner's research found new life in computing. Today, leading AI firms, including Google and OpenAI, use techniques such as reinforcement learning, which trains models through rewards and penalties and draws on concepts derived from Skinner's foundational work. By studying how living organisms, including humans, learn and adapt, AI developers can build models that mimic these natural processes, underscoring the interconnectedness of biology and technology.
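To make "learning through rewards" concrete, here is a minimal sketch of a reward-driven learner. It is an illustration only, not Skinner's protocol or any company's method: it uses a standard epsilon-greedy multi-armed bandit, and the setup (a pigeon choosing between "keys" that deliver food with fixed probabilities) plus the names `train_pigeon`, `reward_probs` and `epsilon` are hypothetical assumptions for this example.

```python
import random


def train_pigeon(reward_probs, trials=1000, epsilon=0.1, seed=0):
    """Toy reward-driven learner (epsilon-greedy bandit).

    Hypothetical setup: each "key" the pigeon can peck delivers food
    (reward 1.0) with a fixed probability, otherwise nothing (reward 0.0).
    """
    rng = random.Random(seed)             # seeded so runs are reproducible
    values = [0.0] * len(reward_probs)    # estimated payoff of each key
    counts = [0] * len(reward_probs)      # how often each key was pecked

    for _ in range(trials):
        if rng.random() < epsilon:
            # Explore: occasionally peck a random key.
            key = rng.randrange(len(reward_probs))
        else:
            # Exploit: peck the key with the highest estimated payoff.
            key = max(range(len(values)), key=lambda k: values[k])
        reward = 1.0 if rng.random() < reward_probs[key] else 0.0
        counts[key] += 1
        # Incremental mean: nudge the estimate toward the observed reward,
        # so rewarded behavior becomes more likely to be repeated.
        values[key] += (reward - values[key]) / counts[key]

    return values, counts


values, counts = train_pigeon([0.2, 0.8])
best = max(range(len(values)), key=lambda k: values[k])
print(f"values={values} pecks={counts} preferred key={best}")
```

Over many trials the learner comes to favor the key that pays off more often, which is the association-by-reinforcement idea in miniature; the small `epsilon` keeps it occasionally trying alternatives so it does not lock onto an early, lucky choice.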

Redefining Art: Native American Perspectives on Technology

In striking contrast to conventional notions of art, many Native American cultures hold a philosophy that rejects the separation of art from life. Most Native American languages have no direct translation for 'art'; these cultures focus instead on action and intention. An emerging cohort of Native artists embraces this principle, treating their work as a form of ceremony, instruction, and ongoing relationship with their environment.

This approach aligns with relationship-based systems of knowledge and offers a counter-narrative to the often extractive data practices prevalent in contemporary tech. As technology becomes further intertwined with cultural narratives, these artists remind us that art can be a living dialogue rather than a static object, challenging dominant paradigms in both technology and creativity.

The Intersection of Technology and Indigenous Knowledge

As business leaders and technologists navigate the evolving tech landscape, there is a compelling reason to integrate insights from Indigenous knowledge systems. Rejecting data extraction in favor of building relationships could significantly reshape how companies approach data ethics and innovation strategy. By listening to these perspectives, businesses can pursue more equitable technological advances that respect cultural heritage and favor collaboration over commodification.

In this respect, understanding and embracing diverse perspectives in technology can prove invaluable. Companies that adopt this broader vision can not only enrich their own innovations but also reach new markets and audiences eager for technology that reflects their values.

The Implications of AI on Business Models

With the rise of AI, businesses must weigh its potential advantages against the ethical quandaries it presents. Anthropic's recent decision to let some of its Claude models end persistently harmful or abusive chatbot conversations, for example, has significant implications for how businesses can use AI responsibly. Such safeguards are intended to protect the models themselves, and they can also contribute to a safer user experience.

AI's potential to automate processes, personalize customer interactions, and analyze trends is compelling, but it comes with a responsibility to handle data ethically and transparently. In this rapidly changing landscape, companies need a growth mindset that values ethical AI deployment while reimagining existing business models.

Challenges and Opportunities Ahead

The push toward AI-infused technologies offers immense opportunities for business innovation, but it also poses significant challenges and risks. Companies must prepare for pitfalls such as data privacy concerns and the risk of building technologies that reinforce existing biases. In addition, pressure to integrate AI rapidly can lead to hasty decisions that harm businesses and consumers alike.

However, these challenges can be met with transparency and a commitment to ethical practice. By prioritizing thoughtful deployment strategies and engaging with diverse communities, businesses can navigate these complexities and realize AI's potential. Doing so can foster an environment where technological advances thrive alongside cultural values and ethical considerations.

Conclusion: Bridging Innovation and Ethics

As we look towards a future shaped by AI, understanding and integrating the lessons learned from both behavioral science and Indigenous philosophies will be crucial. Businesses that successfully navigate this landscape will not only lead in innovation but will also redefine how technology interacts with culture and society. It is imperative for leaders to engage with these ideas, cultivating a harmonious environment where technology and humanity coexist.